tl;dr: the mysql2 gem specifies a default wait_timeout of 2147483 seconds that overrides whatever you set on the mysql server

I spent a whole day yesterday trying to debug why updating mysql’s wait_timeout wasn’t working.

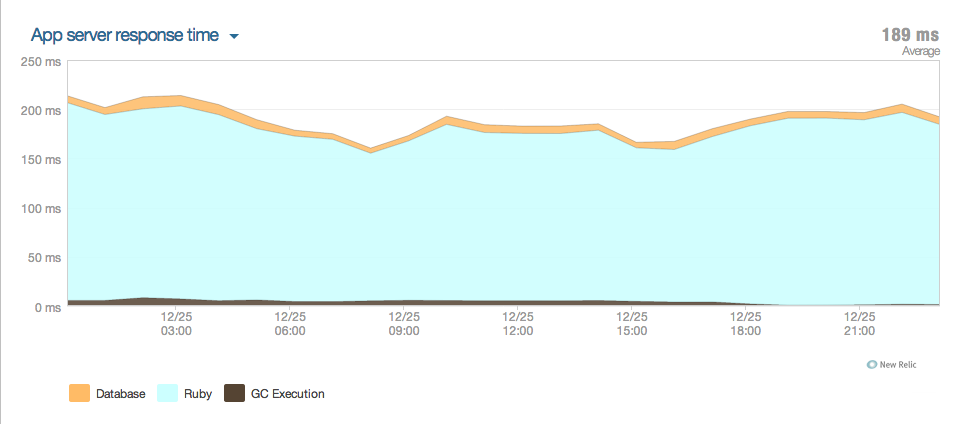

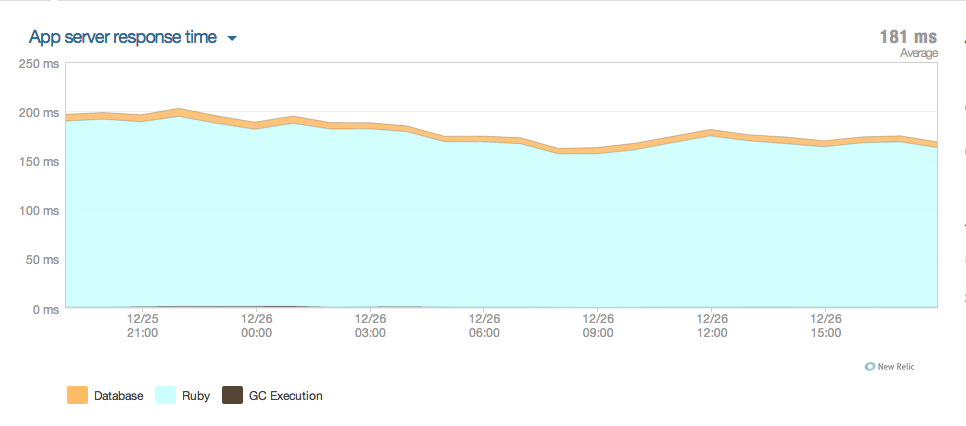

Initially, we were having some trouble with a small mysql ec2 instance that was running out of memory because:

- it had less ram than other boxes doing the same thing (we’d forgotten to upgrade it)

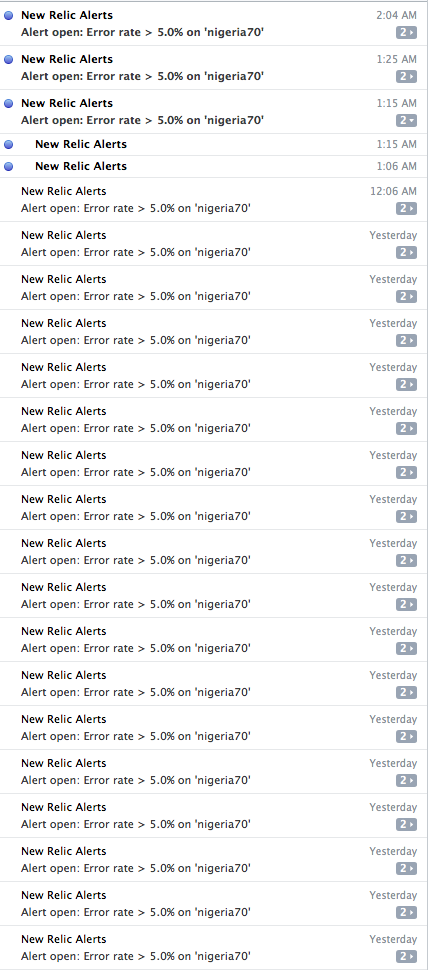

- it would spawn too many connections (12k or so), each of which takes up memory, and because there was no swap configured, mysql would get killed by the OS when it grew too big.

All pretty silly really. It could have been solved by just getting a bigger box, but there were a bunch of reasons why that wasn’t possible right at that second. And there were similar boxes that had been overlooked but were doing fine; the difference was that they had fewer connections, about 8k vs our 12k.

In checking out the connections, I realized almost 80% of them were sleeping and had been sleeping for a long time. I figured that setting a low enough wait_timeout (something like 120 seconds or so … average user session limit) on that box would solve the problem. I was worried about “mysql server has gone away” Airbrake errors showing up, but I figured it was worth a shot to see if it relieved the memory pressure enough to stop worrying about this particular mysql server dying every few days.
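The "how many connections are just sleeping" check can be sketched in a few lines of Ruby. This is only an illustration: it assumes rows shaped like the output of `SHOW FULL PROCESSLIST` (a `Command` and a `Time` column per connection), and the sample rows and the `sleeping_summary` helper are made up for the example, not taken from the real server.

```ruby
# Summarise a process list: how many connections are sleeping, and how many
# have been asleep longer than the timeout we are thinking of setting.
# Each row is assumed to be a hash with "Command" and "Time" (seconds) keys,
# like the rows returned by SHOW FULL PROCESSLIST.
def sleeping_summary(rows, threshold_seconds)
  sleeping = rows.select { |row| row["Command"] == "Sleep" }
  stale = sleeping.select { |row| row["Time"].to_i > threshold_seconds }
  percent = rows.empty? ? 0.0 : (100.0 * sleeping.size / rows.size).round(1)

  {
    total: rows.size,
    sleeping: sleeping.size,
    stale: stale.size,
    sleeping_percent: percent
  }
end

# Hypothetical sample data, only here to show the shape of the input.
sample_rows = [
  { "Id" => 1, "Command" => "Sleep", "Time" => 3600 },
  { "Id" => 2, "Command" => "Sleep", "Time" => 45 },
  { "Id" => 3, "Command" => "Query", "Time" => 0 },
  { "Id" => 4, "Command" => "Sleep", "Time" => 900 }
]

p sleeping_summary(sample_rows, 120)
```

Anything counted under `stale` is a connection that a 120 second wait_timeout would, in principle, have closed already.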

We updated the settings from the mysql console (setting the GLOBAL wait_timeout there), restarted the server, and waited … but the mysql connections just traipsed past the wait_timeout limit.

odd.

Initially I thought it’d been done incorrectly, so I manually edited the my.cnf file and restarted the mysql process again, which was painful because even though this was a small box, it had about 11G of mysql in memory that it had to flush.

Again the connections came back up and went past the limit we set.

After investigating for a while, I remembered having encountered a similar problem a few months ago when I tried to do the same thing on a personal project. I had been able to set the wait_timeout on my production server but never got it to work on my local environment.

I raced home and started looking around to see what the difference was between the two. That’s when I realized that I had specified a wait_timeout in the database.yml of my production box but not my dev box. After googling for a bit I finally pieced it all together.

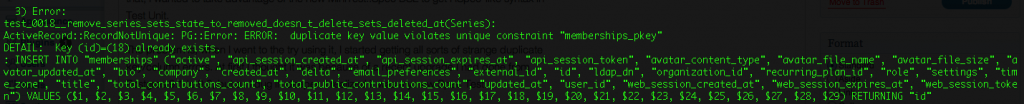

It turns out that the mysql2 gem does this thing …

ConnectionPool uses wait_timeout to decide how long to wait for a connection checkout on a full connection pool, and it defaults to 5 seconds.

mysql2_adapter uses this same wait_timeout, but passes it directly to mysql, and defaults to a much larger 2592000!!

Things have changed a little since that GitHub issue was filed, but essentially the adapter substitutes its own default of 2147483 seconds (about 25 days) as the wait_timeout that the mysql connection uses (probably the session version of wait_timeout), basically overriding whatever setting we specify on the server.
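To make the defaulting concrete, here is a minimal sketch of the behaviour as described in this post. It is not the adapter's actual source: the method names are invented for illustration, and the "fall back unless the configured value is an integer" rule is an assumption about how the default kicks in.

```ruby
# Sketch of the defaulting behaviour described above; NOT the adapter's real code.
DEFAULT_WAIT_TIMEOUT = 2_147_483 # seconds, the default quoted in this post

# config stands in for the symbol-keyed hash built from config/database.yml.
# Assumption: anything that is not an Integer falls back to the default.
def session_wait_timeout(config)
  value = config[:wait_timeout]
  value.is_a?(Integer) ? value : DEFAULT_WAIT_TIMEOUT
end

# A statement a client could send right after connecting so the value
# applies to its own session, regardless of the server-wide setting.
def wait_timeout_statement(config)
  "SET @@SESSION.wait_timeout = #{session_wait_timeout(config)}"
end

puts wait_timeout_statement({})                    # nothing set in database.yml
puts wait_timeout_statement({ wait_timeout: 120 }) # explicit value wins
puts((DEFAULT_WAIT_TIMEOUT / 86_400.0).round(2))   # the default expressed in days
```

The point of the sketch is the first call: with no wait_timeout in database.yml, the session still gets an explicit (and huge) value, so the server's own setting never comes into play for that connection.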

By setting a wait_timeout: value in config/database.yml, the timeout works as it should. When the connection gets killed, however, you get the infamous “mysql server has gone away” errors.

By specifying an accompanying

reconnect: true

option in database.yml, this is fixed, but in a quirky way.
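For reference, this is roughly what such a database.yml entry could look like, wrapped in a few lines of Ruby that parse it so you can see the values an app would read. The database name, host and the 120 second value are placeholders, and `production_db_config` is just a helper made up for this example.

```ruby
require "yaml"

# Hypothetical database.yml content; database name and host are placeholders.
DATABASE_YML = <<~YAML
  production:
    adapter: mysql2
    database: myapp_production
    host: localhost
    wait_timeout: 120
    reconnect: true
YAML

# Parse the YAML and return the settings for the production environment.
def production_db_config
  YAML.safe_load(DATABASE_YML).fetch("production")
end

config = production_db_config
puts "wait_timeout: #{config['wait_timeout'].inspect}"
puts "reconnect:    #{config['reconnect'].inspect}"
```

Note that wait_timeout is written as a bare number, so it parses as an integer rather than a string.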

Every time the connection is reused (say you run an Active Record command at the console, then run another one), the connection’s timer is reset, BUT after that the connection uses the wait_timeout setting that you configured in mysql directly.

So say you specified a 10 second timeout in your database.yml and 15 seconds in your my.cnf file, then logged into the console and ran an Active Record command.

Mysql would spawn a connection that, once it was done, would sleep for 10 seconds before being closed by mysql.

If before the end of that 10 seconds you ran another command, it would execute it and then start to sleep again, but now (and every time after) it would sleep for the 15 seconds specified in my.cnf before being killed by mysql.
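If you want to watch this happen yourself, one option is to ask mysql for both the server-wide and the per-connection value before and after running a command. The helper below is a sketch: `wait_timeouts` is a name made up here, and it assumes a client object with a `#query` method that returns rows as string-keyed hashes (a `Mysql2::Client` behaves that way by default).

```ruby
# Ask the server for the global timeout and the one this connection is using.
TIMEOUT_SQL = "SELECT @@GLOBAL.wait_timeout AS global_timeout, " \
              "@@SESSION.wait_timeout AS session_timeout"

# client is assumed to respond to #query and return an enumerable of hashes.
def wait_timeouts(client)
  row = client.query(TIMEOUT_SQL).first
  { global: row["global_timeout"].to_i, session: row["session_timeout"].to_i }
end

# Usage with the mysql2 gem (host and credentials are placeholders):
#   require "mysql2"
#   client = Mysql2::Client.new(host: "localhost", username: "root")
#   p wait_timeouts(client)
```

In the 10 vs 15 second scenario above, you would expect `session` to start at 10 and later report 15, while `global` stays at 15 throughout.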

Hope that piece of trivia brightens up your day 😀